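0. Preface

After a fresh PyTorch install, my visualization tool visdom stopped working, so I switched to TensorBoard. Below are the commands I use for TensorBoard and its variants.

1. Principle

TensorBoard and visdom work differently. TensorBoard reads files: the program first saves whatever it wants to display into log files, and TensorBoard then reads those files and renders them. visdom displays directly from code: the program passes the data straight to visdom while it runs.

What the two have in common is that both have to be started outside the program.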
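To make the file-based pattern concrete, here is a minimal standard-library sketch. It is only an analogy: the function names `write_scalar` and `read_scalars` and the JSON-lines format are made up for illustration, and real TensorBoard event files are binary records rather than JSON.

```python
import json
import os
import tempfile


def write_scalar(log_path, tag, value, step):
    """Training side: append one record to the log file (hypothetical format)."""
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps({"tag": tag, "value": value, "step": step}) + "\n")


def read_scalars(log_path, tag):
    """Viewer side: a separate process re-reads the file and picks out one tag."""
    points = []
    with open(log_path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            if record["tag"] == tag:
                points.append((record["step"], record["value"]))
    return points


# The "training program" only ever writes to the file ...
log_path = os.path.join(tempfile.mkdtemp(), "events.jsonl")
for step, loss in enumerate([0.9, 0.5, 0.3]):
    write_scalar(log_path, "loss", loss, step)

# ... and the "viewer" only ever reads it, so the two never talk directly.
loss_points = read_scalars(log_path, "loss")
print(loss_points)
```

The writer and the reader share nothing but the file path, which is why the viewer can be started before, during, or after training.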

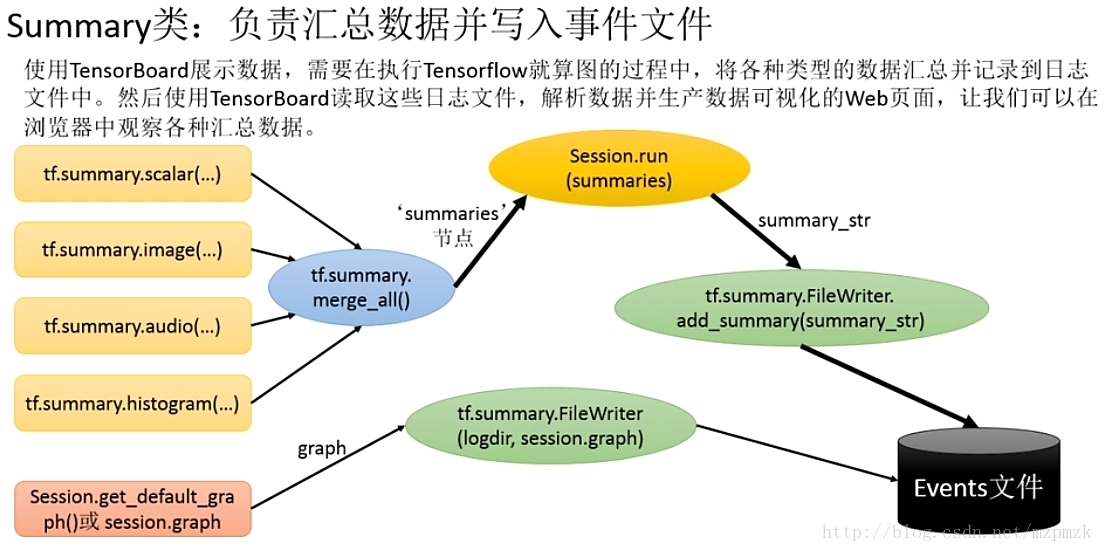

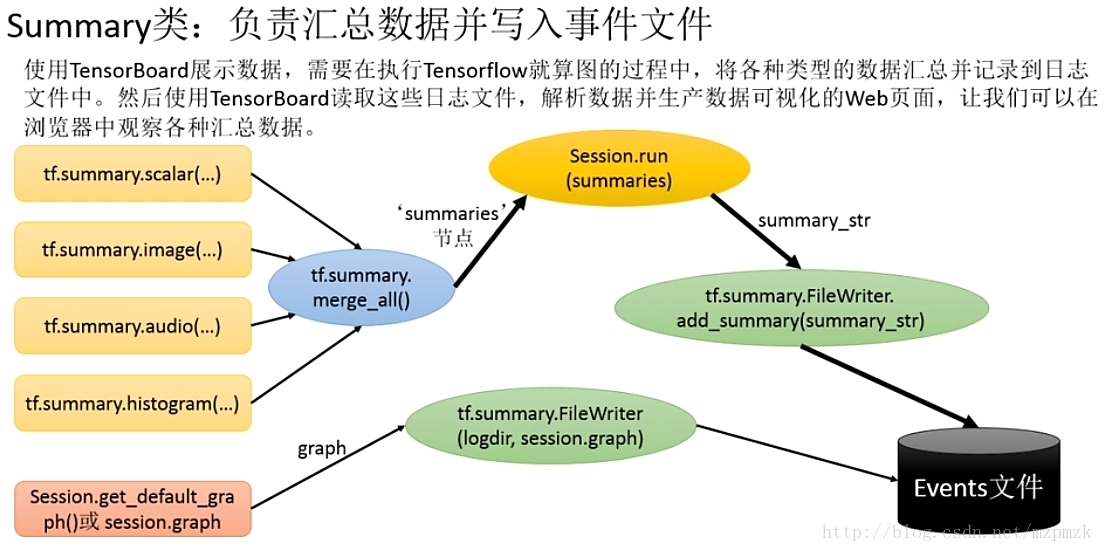

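2. API and workflow

2.1 API

tf.summary.FileWriter: writes summary data to disk

tf.summary.scalar: summarizes and records scalar data

tf.summary.histogram: records a histogram of the data

tf.summary.image: writes images to the summary

tf.summary.merge: merges a given set of summaries

tf.summary.merge_all: merges all summaries in the default graph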

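2.2 Workflow

Add summary nodes: tf.summary.scalar/image/histogram() and so on

Merge the summary nodes: merged = tf.summary.merge_all()

Run the merged node: summary = sess.run(merged), which returns the summary result

Instantiate the log writer: summary_writer = tf.summary.FileWriter(logdir, graph=sess.graph). Passing graph at construction time writes the current computation graph to the log.

Call add_summary(summary, global_step=i) on the summary_writer instance to write the summaries to the log file

Call close() on the summary_writer instance to flush pending data to disk; otherwise the writer only flushes every 120 s by default. After close() nothing more can be written until the writer is reopened with reopen(). If you want to keep writing, call summary_writer.flush() instead.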
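The difference between flush() and close() can be seen with an ordinary buffered file. This is a standard-library analogy, not the FileWriter itself: the file name and log lines are made up, and the only point is that buffered data becomes visible on disk after a flush, while a closed handle can no longer be written to.

```python
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "buffered.log")

f = open(log_path, "w", encoding="utf-8", newline="\n")
f.write("step=0 loss=0.9\n")            # small write stays in the in-process buffer
size_before_flush = os.path.getsize(log_path)

f.flush()                               # push buffered data to the file, keep the handle open
size_after_flush = os.path.getsize(log_path)

f.write("step=1 loss=0.5\n")
f.close()                               # close() flushes too, but ends this handle
size_after_close = os.path.getsize(log_path)

try:
    f.write("step=2 loss=0.3\n")        # writing after close() is an error
    wrote_after_close = True
except ValueError:
    wrote_after_close = False

print(size_before_flush, size_after_flush, size_after_close, wrote_after_close)
```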

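3. Launch

```shell
tensorboard --logdir /path/to/log
```

4. Initialization

```python
import tensorflow as tf  # TensorFlow 1.x API (in 2.x it lives under tf.compat.v1)

sess = tf.Session()

# Option 1: pass the graph to the constructor and flush every 2 seconds
writer = tf.summary.FileWriter("/path/to/log", sess.graph, flush_secs=2)

# Option 2: create the writer without a graph
writer = tf.summary.FileWriter("/path/to/log")

# Option 3: create the writer first, then add the graph explicitly
writer = tf.summary.FileWriter("/path/to/log")
writer.add_graph(sess.graph)  # sess.graph is a property, not a method
```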

Other commonly used APIs

add_event(event): adds an event to the event file

add_graph(graph, global_step=None): adds a Graph to the event file. Most users pass a graph in the constructor instead.

add_summary(summary, global_step=None): adds a Summary protocol buffer to the event file. Be sure to pass global_step.

close(): flushes the event file to disk and closes the file

flush(): flushes the event file to disk

add_meta_graph(meta_graph_def, global_step=None)

add_run_metadata(run_metadata, tag, global_step=None)

Note: I still haven't figured out how to use add_event.

5. scalar

```python
import tensorflow as tf  # TensorFlow 1.x API

summary_writer = tf.summary.FileWriter("/path/to/log")
sess = tf.Session()

accuracy = tf.constant(0.9)  # placeholder value; normally a tensor from your model
global_step = 0              # placeholder value; normally the training step

tf.summary.scalar('accuracy', accuracy)
summary_op = tf.summary.merge_all()
summary_str = sess.run(summary_op)
summary_writer.add_summary(summary_str, global_step)

# family sets the tab name these tags are grouped under in TensorBoard
tf.summary.scalar('loss1', 1, family='loss')
tf.summary.scalar('loss2', 2, family='loss')
tf.summary.scalar('loss3', 2)
```

Or log custom data

```python
import tensorflow as tf  # TensorFlow 1.x API

global_step = 0  # placeholder value; normally the training step

# Variant 1: build the Summary from a list of values
summary_writer = tf.summary.FileWriter("/path/to/log")
summary = tf.Summary(value=[
    tf.Summary.Value(tag='test2', simple_value=0),
    tf.Summary.Value(tag='test3', simple_value=1),
])
summary_writer.add_summary(summary, global_step)

# Variant 2: start from an empty Summary and add values one by one
summary_writer = tf.summary.FileWriter("/path/to/log")
summary = tf.Summary()
summary.value.add(tag='test4', simple_value=0)
summary.value.add(tag='test5', simple_value=1)
summary_writer.add_summary(summary, global_step)
```

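6. histogram

```python
tf.summary.histogram('layer' + str(i + 1) + 'weights', weights)
```

Here weights can be a list or a PyTorch tensor. I haven't tried other types, but presumably most array-like inputs work; my guess is that they are all converted to NumPy arrays internally.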

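7. image

```python
# Log up to 3 images from the batch x_image
tf.summary.image('input', x_image, max_outputs=3)

# Reshape flat images to 28x28 single-channel and log up to 10 of them
tf.summary.image('test', tf.reshape(images, [-1, 28, 28, 1]), 10)

# A PyTorch tensor in [batch_size, height, width, channels] layout
tf.summary.image('test1', torch.rand(3, 256, 256, 3))
```

Note: x_image must be a uint8 or float32 4-D tensor of shape [batch_size, height, width, channels], where channels is 1, 3 or 4.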
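The shape rule is easy to get wrong when images come from PyTorch, which usually stores a single image as [channels, height, width]. The sketch below works on plain shape tuples only; both helper names are hypothetical and nothing here calls TensorFlow or PyTorch.

```python
def is_valid_image_batch_shape(shape):
    """True if shape looks like [batch_size, height, width, channels]
    with channels equal to 1, 3 or 4."""
    return len(shape) == 4 and shape[3] in (1, 3, 4)


def chw_to_nhwc_shape(shape):
    """Shape that a single [channels, height, width] image has once it is
    moved to a batch of one in [batch_size, height, width, channels] layout."""
    channels, height, width = shape
    return (1, height, width, channels)


batch_ok = is_valid_image_batch_shape((3, 256, 256, 3))      # already a valid batch
single_chw_ok = is_valid_image_batch_shape((3, 271, 108))    # 3-D, so not valid yet
target_shape = chw_to_nhwc_shape((3, 271, 108))

print(batch_ok, single_chw_ok, target_shape)
```

Note that getting from [channels, height, width] to the target shape means moving the channel axis (for example with permute on a PyTorch tensor) before adding the batch dimension; a bare reshape would scramble the pixels.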

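For NumPy

```python
import numpy
import tensorflow as tf  # TensorFlow 1.x API
from PIL import Image

# sess, summary_writer and global_step are the objects set up in the scalar section
image_ = Image.open('path')
image_numpy = numpy.array(image_)  # [height, width, channels]

# Add the batch dimension; 271 x 108 is the height x width of this particular image
tf.summary.image('test1', image_numpy.reshape(1, 271, 108, 3))
summary_op = tf.summary.merge_all()
summary_str = sess.run(summary_op)
summary_writer.add_summary(summary_str, global_step)
```

For PyTorch

```python
import tensorflow as tf  # TensorFlow 1.x API
from PIL import Image
from torchvision import transforms as T

# sess, summary_writer and global_step are the objects set up in the scalar section
image_ = Image.open('path')
image_tensor = T.ToTensor()(image_)  # [channels, height, width]

# Move channels last, then add the batch dimension
tf.summary.image('test9', image_tensor.permute(1, 2, 0).reshape(1, 271, 108, 3))
summary_op = tf.summary.merge_all()
summary_str = sess.run(summary_op)
summary_writer.add_summary(summary_str, global_step)
```

As before, the input must be a uint8 or float32 4-D tensor of shape [batch_size, height, width, channels], where channels is 1, 3 or 4.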

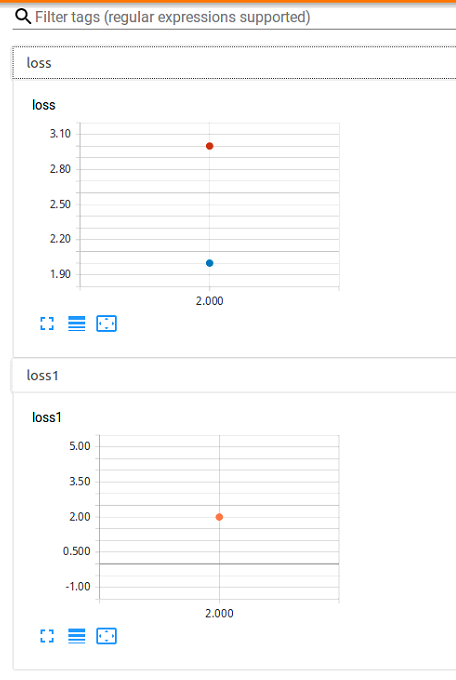

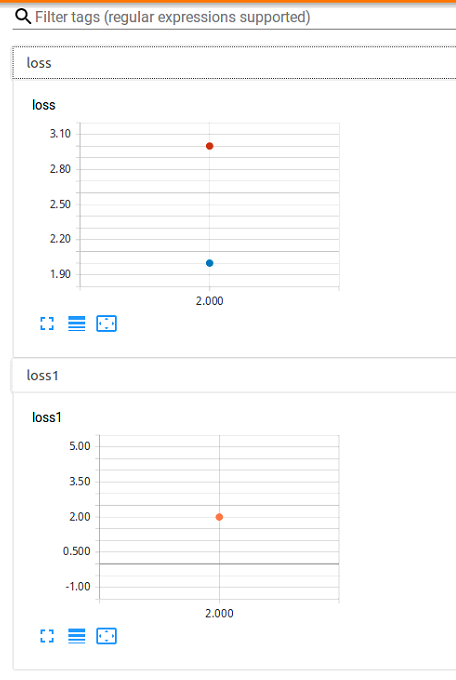

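8. Visualizing multiple events

If subdirectories of logdir contain data from other runs (multiple event files), TensorBoard shows the data from all of those runs (mainly scalars). This makes it possible to compare a model under different parameters and tune the parameters for the best result.

I still need to test this.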
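One way to picture the layout is one subdirectory per run under a shared logdir. The sketch below builds such a layout with empty placeholder files and then lists the runs. It rests on assumptions: `find_runs` is a hypothetical helper, the run names are invented, and treating any file whose name contains "tfevents" as an event file only approximates how TensorBoard discovers runs.

```python
import os
import tempfile


def find_runs(logdir):
    """Return the directories under logdir (relative paths) that hold an event file.

    Assumption for this sketch: an event file is any file whose name
    contains 'tfevents'.
    """
    runs = []
    for dirpath, _dirnames, filenames in os.walk(logdir):
        if any("tfevents" in name for name in filenames):
            runs.append(os.path.relpath(dirpath, logdir))
    return sorted(runs)


logdir = tempfile.mkdtemp()
for run_name in ("lr_0.1", "lr_0.01"):           # hypothetical run names
    run_dir = os.path.join(logdir, run_name)
    os.makedirs(run_dir)
    # Empty placeholder standing in for a real event file.
    open(os.path.join(run_dir, "events.out.tfevents.0.localhost"), "w").close()

runs = find_runs(logdir)
print(runs)
```

With real event files in place of the placeholders, pointing tensorboard --logdir at the parent directory is what puts the runs side by side.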

9. tensorboard_logger

For now, tensorboard_logger can be thought of as a simplified TensorBoard, or as a high-level API on top of it.

tensorboard_logger relies on TensorBoard to display what it logs.

```shell
pip install tensorboard_logger
# replace /port with the port number you want
tensorboard --logdir /path/to/log --port /port
```

```python
import torch
from tensorboard_logger import Logger

# Style 1: an explicit Logger object
logger = Logger(logdir='./logs', flush_secs=2)
logger.log_value('loss', 10, step=3)
# image_numpy is the image array loaded in the image section
logger.log_images('images', image_numpy.reshape(1, 271, 168, 3), step=2)
logger.log_histogram('weights', torch.rand(2, 3) + 10, step=10)

# Style 2: configure a default logger once, then call module-level functions
from tensorboard_logger import configure, log_value

configure("runs/run-1234", flush_secs=5)
log_value('v1', v1, step)  # v1 and step come from your training loop
```

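10. tensorboardX

https://github.com/lanpa/tensorboardX

tensorboardX writes the event files itself, but it still needs TensorBoard (which I installed together with TensorFlow) to view them.

```shell
pip install tensorboardX
# replace /port with the port number you want
tensorboard --logdir /path/to/log --port /port
```

```python
from tensorboardX import SummaryWriter

# As far as I can tell, comment only affects the auto-generated default
# directory name, so it has no effect once logdir is given explicitly
writer = SummaryWriter(logdir='tensorboard7', comment='test1', flush_secs=1)

# ... call writer.add_* here ...

writer.close()
```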

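Another way to write it

```python
from tensorboardX import SummaryWriter

with SummaryWriter(logdir='tensorboard7') as w:
    w.add_scalar('loss1', 2, global_step=2)  # any add_* call goes here
```

I don't recommend the with form: every with block creates a new event file, which gets messy.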

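10.1 add_scalar

```python
# A single scalar
writer.add_scalar('loss1', 2, global_step=2)

# Several scalars under one main tag
writer.add_scalars('loss', {'loss1': 2, 'loss2': 3}, global_step=2)
```

Note:

SummaryWriter and the earlier Writer classes all create the log directory automatically. Running tensorboard --logdir /path/to/log recursively walks every folder and file under that directory and displays the events it finds. Scalars with the same name are shown together, which is handy for comparing results before and after a change.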

10.2 add_image

```python
import torch
import torchvision
from PIL import Image
from torchvision import transforms as T

# Signature: writer.add_image(tag, img_tensor, global_step=None)
image_path = 'test.jpg'
image_ = Image.open(image_path)
image_tensor = T.ToTensor()(image_)  # [channels, height, width]
writer.add_image('test1', image_tensor, global_step=2)

# Tile a batch of 16 images into one grid image, 8 per row
grid = torchvision.utils.make_grid(torch.rand(16, 3, 256, 256), nrow=8, padding=20)
writer.add_image('test2', grid, global_step=2)
```

10.3 add_histogram

```python
import torch

# Signature: writer.add_histogram(tag, values, global_step=None, bins='tensorflow')
writer.add_histogram('test1', torch.rand(16, 3, 256, 256), global_step=3)
```

10.4 add_graph

```python
import torch
from torch import nn


class Net1(nn.Module):
    def __init__(self):
        super(Net1, self).__init__()
        self.conv1 = nn.Conv2d(3, 10, kernel_size=3, padding=0)
        self.fc1 = nn.Linear(10, 100)

    def forward(self, x):
        x = self.conv1(x)
        x = x.view(-1, 10)
        x = self.fc1(x)
        x = torch.nn.functional.softmax(x, dim=1)
        return x


# Signature: add_graph(model, input_to_model, verbose=False)
dummy_input = torch.rand(4, 3, 3, 3)
model = Net1()
writer.add_graph(model, (dummy_input,))  # writer is the SummaryWriter created above
```

At last PyTorch can display a graph too. That took some effort; I should have gotten familiar with these APIs earlier.

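11. Conclusion

To sum up, my take is:

tensorboardX is the best fit for deep learning frameworks other than TensorFlow

tensorboard is the fit for TensorFlow

tensorboard_logger also suits non-TensorFlow frameworks and can be seen as a simplified tensorboardX

tensorboardX and tensorboard_logger are interchangeable

I'm starting to use tensorboardX as my new visualization tool.

I don't really understand the difference between tensorboardX and tensorboard_logger. Maybe they are just developed by different people?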