0. Preface

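This paper adds an SE block to a neural network to explicitly model the interdependencies between channels and adaptively recalibrate channel-wise feature responses, which improves performance. In principle the block can be plugged into any network for any task, and the method won first place in the ImageNet (ILSVRC) 2017 classification challenge. Pretty impressive.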

- paper: CVPR2018_Squeeze-and-Excitation Networks

- code: Caffe, TensorFlow, MatConvNet, MXNet, PyTorch, Chainer, PyTorch

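The paper is clear, easy to follow and very detailed (though it also contains a fair amount of filler), and the method really works. Let's read through it together.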

1. Introduction

- SE block: Squeeze and Excitation block

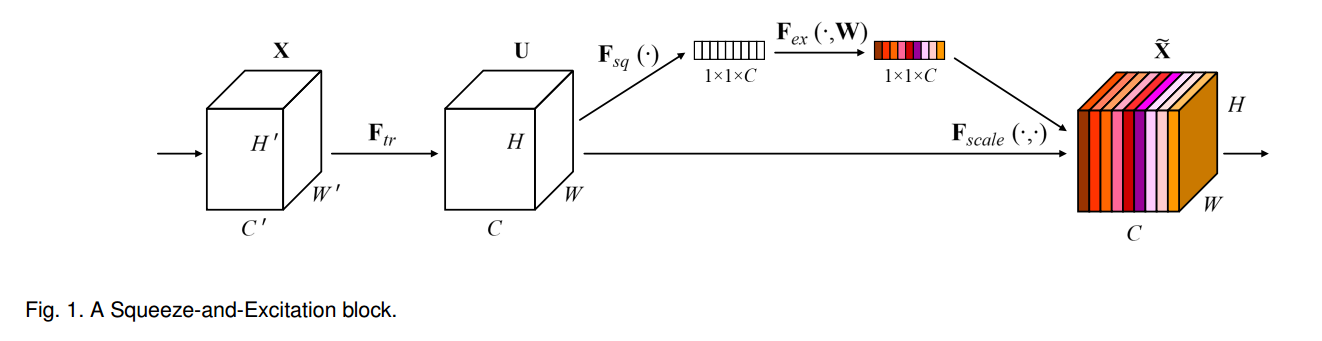

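Notation: $F_{tr}: X \to U,\ X \in \mathbb{R}^{H' \times W' \times C'},\ U \in \mathbb{R}^{H \times W \times C}$, where $F_{tr}$ is a transformation such as a convolution.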

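In lower layers, the SE block tends to excite informative features in a class-agnostic way, strengthening the shared low-level representations. In higher layers it becomes increasingly specialized and responds to different inputs in a highly class-specific way.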

3. Squeeze and Excitation Blocks

3.1 Squeeze: Global Information Embedding

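The squeeze step uses global average pooling to compress the spatial information of each channel $u_c$ into a single channel descriptor:

$$z_c = F_{sq}(u_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)$$

where $z \in \mathbb{R}^C$.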
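The following is a minimal NumPy sketch of the squeeze step. It assumes a channel-last array `u` of shape (H, W, C), which matches the $H \times W \times C$ notation. The function name `squeeze` is illustrative and not taken from the authors' code.

```python
import numpy as np

def squeeze(u: np.ndarray) -> np.ndarray:
    """Global average pooling: U of shape (H, W, C) -> z of shape (C,)."""
    # z_c = 1 / (H * W) * sum_i sum_j u_c(i, j)
    return u.mean(axis=(0, 1))

# Toy 2 x 2 feature map with 3 channels.
u = np.arange(2 * 2 * 3, dtype=float).reshape(2, 2, 3)
z = squeeze(u)
print(z)  # one scalar per channel
```

Each channel collapses to one number, so the descriptor $z$ summarizes the global spatial context of that channel.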

3.2 Excitation: Adaptive Recalibration

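The excitation step uses a gating mechanism to compute per-channel weights from $z$:

$$s = F_{ex}(z, W) = \sigma(W_2\,\delta(W_1 z))$$

where $\delta$ is the ReLU function, $\sigma$ is the sigmoid function, $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$ and $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$. In other words, there are two FC layers: the first reduces the dimension by a ratio $r$ and the second restores it. The block output is then obtained by rescaling each channel: $\tilde{x}_c = F_{scale}(u_c, s_c) = s_c \cdot u_c$.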
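Here is a small NumPy sketch of the excitation formula $s = \sigma(W_2\,\delta(W_1 z))$. As in the formula, biases are omitted. The random weights are placeholders for learned FC parameters, and the sizes `C = 32`, `r = 16` are arbitrary choices for illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def excitation(z, w1, w2):
    """s = sigmoid(W2 @ relu(W1 @ z)); z: (C,), w1: (C // r, C), w2: (C, C // r)."""
    return sigmoid(w2 @ relu(w1 @ z))

C, r = 32, 16
rng = np.random.default_rng(0)
w1 = rng.standard_normal((C // r, C))   # dimensionality-reduction FC layer
w2 = rng.standard_normal((C, C // r))   # dimensionality-increasing FC layer
z = rng.standard_normal(C)              # stand-in for a squeezed descriptor
s = excitation(z, w1, w2)
print(s.shape)  # (32,)
```

The bottleneck `C // r` keeps the two FC layers cheap. The sigmoid keeps every channel weight between 0 and 1.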

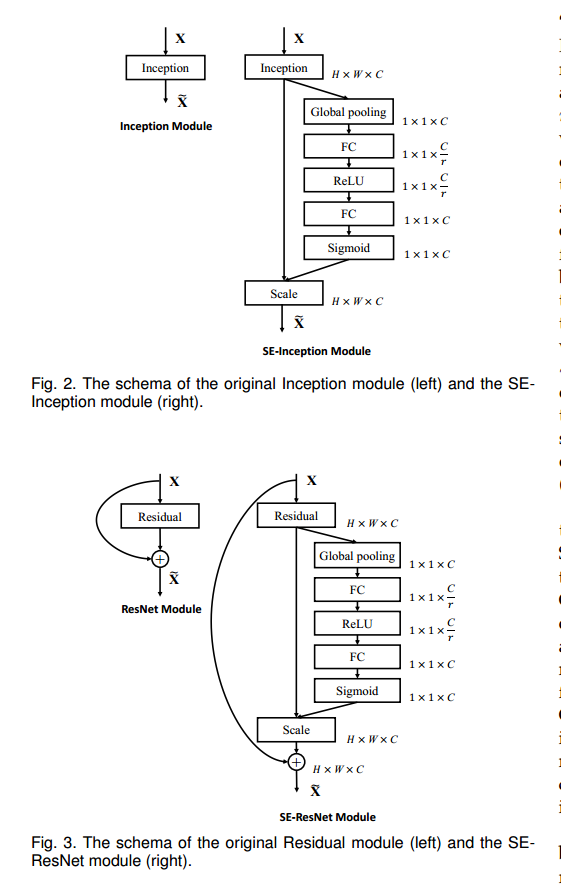

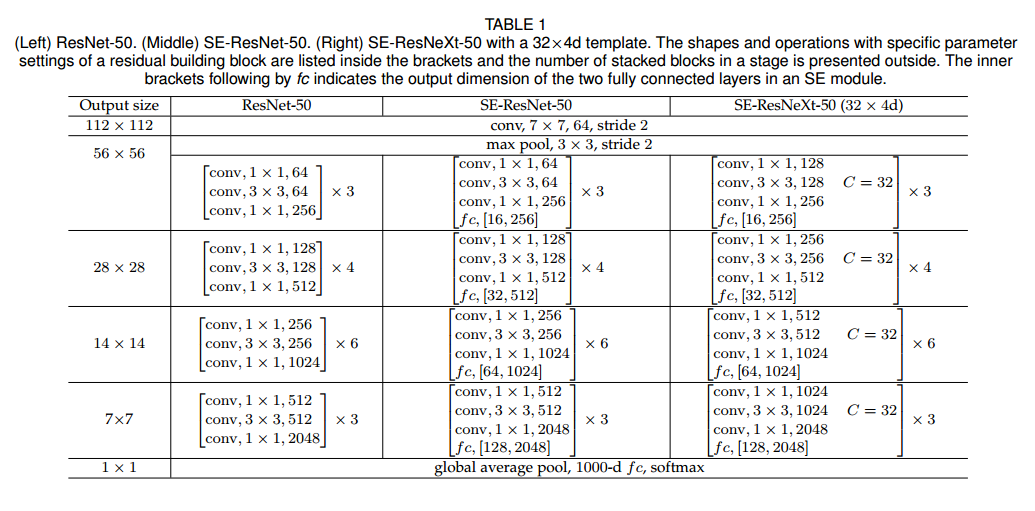
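Putting squeeze, excitation and scale together gives a full SE block. The sketch below assumes the SE-ResNet arrangement from the paper, where the SE block recalibrates the residual branch before it is added to the identity branch. `residual_fn` is a hypothetical stand-in for the real convolutional branch. The layout is channel-last (H, W, C), and this is not the authors' implementation.

```python
import numpy as np

def se_block(u, w1, w2):
    """SE block on a channel-last feature map U of shape (H, W, C)."""
    z = u.mean(axis=(0, 1))                  # squeeze: global average pooling -> (C,)
    h = np.maximum(w1 @ z, 0.0)              # first FC + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h)))      # second FC + sigmoid -> (C,)
    return u * s                             # scale: x~_c = s_c * u_c, broadcast over H, W

def se_residual(x, residual_fn, w1, w2):
    """SE-ResNet style unit: recalibrate the residual branch, then add the identity."""
    return x + se_block(residual_fn(x), w1, w2)

H, W, C, r = 4, 4, 16, 4
rng = np.random.default_rng(0)
x = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C // r, C))
w2 = rng.standard_normal((C, C // r))
# Hypothetical residual branch; in SE-ResNet this would be the bottleneck conv stack.
y = se_residual(x, lambda t: 2.0 * t, w1, w2)
print(y.shape)  # (4, 4, 16)
```

Placing the block on the residual branch leaves the identity path untouched, so the block only reweights what the branch adds.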

3.3 Instantiations

4. Experiments

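The experiments focus mainly on classification and related tasks. They show that SE blocks perform well across many architectures (ResNet, Inception, etc.) and datasets (ImageNet, CIFAR-100, etc.).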

5. Ablation Study

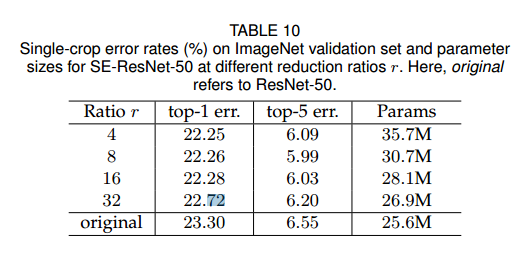

5.1 Reduction ratio

Reduction ratio r in Excitation

The authors set r = 16, which gives a good trade-off between accuracy and model complexity.
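The reduction ratio matters because the two FC matrices add about $2C^2/r$ weights per SE block, ignoring biases. Summed over stages, this gives $\frac{2}{r}\sum_s N_s C_s^2$. The sketch below tallies that total. It assumes an SE-ResNet-50 layout with one SE block on each bottleneck output, with stages of (3, 256), (4, 512), (6, 1024) and (3, 2048) blocks and channels. `se_extra_params` is a hypothetical helper.

```python
def se_extra_params(stages, r):
    """Extra SE weights: (2 / r) * sum_s N_s * C_s**2, biases ignored.

    stages: list of (N_s, C_s) = (number of blocks, channels) per stage.
    """
    return sum(2 * n * c * c // r for n, c in stages)

# Assumed SE-ResNet-50 layout: SE on each bottleneck's 4x-expanded output.
resnet50_stages = [(3, 256), (4, 512), (6, 1024), (3, 2048)]
for r in (4, 8, 16, 32):
    print(r, se_extra_params(resnet50_stages, r))
```

With r = 16, this comes to roughly 2.5M extra weights. That is small next to ResNet-50's roughly 25M, and halving r doubles the overhead.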

5.2 Squeeze Operator

The authors only compared max pooling and average pooling. The two perform comparably, with average pooling slightly better.
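The two squeeze variants differ only in the reduction over the spatial axes. This sketch uses a hypothetical `mode` switch on a channel-last (H, W, C) array.

```python
import numpy as np

def squeeze(u, mode="avg"):
    """Reduce U of shape (H, W, C) to a (C,) descriptor by average or max pooling."""
    if mode == "avg":
        return u.mean(axis=(0, 1))   # global average pooling
    if mode == "max":
        return u.max(axis=(0, 1))    # global max pooling
    raise ValueError(f"unknown squeeze mode: {mode!r}")

u = np.arange(2 * 2 * 3, dtype=float).reshape(2, 2, 3)
print(squeeze(u, "avg"), squeeze(u, "max"))
```

Average pooling summarizes every spatial position. Max pooling keeps only the strongest response per channel.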

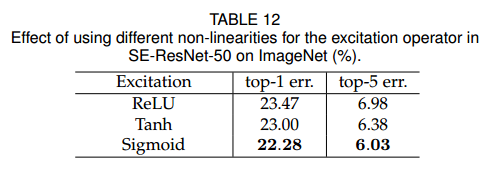

5.3 Excitation Operator

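The authors compared ReLU, Tanh and Sigmoid. Experiments show that Sigmoid works best. This refers to the second activation (the gating function $\sigma$), not the ReLU after the first FC layer.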
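To see why the gate choice matters, the sketch below applies the three candidate gates to the same pre-activation values. The dictionary `GATES` is illustrative only. The comments describe each function's output range, not results from the paper.

```python
import numpy as np

GATES = {
    "sigmoid": lambda x: 1.0 / (1.0 + np.exp(-x)),  # (0, 1): soft per-channel weights
    "tanh": np.tanh,                                # (-1, 1): can flip a channel's sign
    "relu": lambda x: np.maximum(x, 0.0),           # [0, inf): can zero out or amplify
}

pre = np.array([-2.0, 0.0, 2.0])   # example pre-activations from the second FC layer
for name, gate in GATES.items():
    print(name, np.round(gate(pre), 3))
```

Only the sigmoid yields bounded, non-negative weights for every channel at once. This fits the goal of rescaling channels rather than replacing them.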

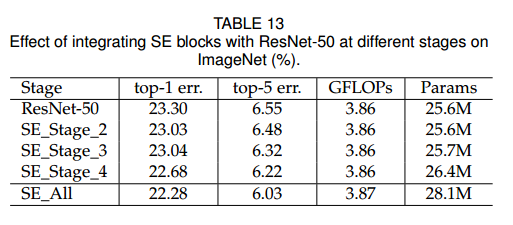

5.4 Different stages

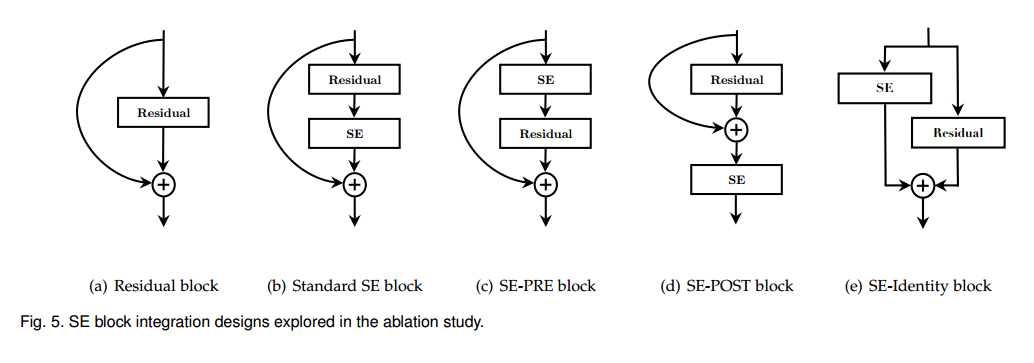

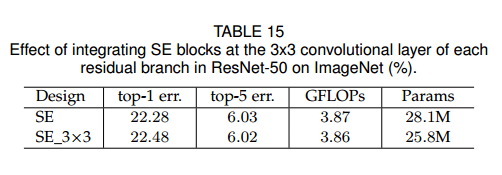

5.5 Integration strategy

6. code

The code is quite simple.

# SE ResNet50